Spencer Wright

Designing an AI Chatbot

Created an AI chatbot that improved conversation completion rates by 33% and gained beta launch approval.

Product

Embark App

Team

2 Designers

1 Project Manager

2 Developers

Role

Design lead

Timeline

8 weeks

Overview

Imagine learning a new language in just six weeks—then moving abroad to teach and communicate daily. That’s the challenge missionaries face at the MTC.

To prepare, they use Embark, our in-house language-learning app. It covered vocabulary and grammar—but offered no way to practice speaking on their own.

This case study shows how I designed an AI chatbot that enabled real conversation practice, improved completion rates by 33%, and earned stakeholder approval for a beta launch.

My impact

Improvement to completion rate

Improved conversation completion rate by 33% after design updates

Beta launch approved

Greenlit by key stakeholders

Background

Problem

Embark offered strong tools for learning vocabulary, grammar, and listening comprehension—but no way to practice conversations independently. Missionaries could speak during class, but lacked flexible, on-demand speaking practice that fit their schedule and needs.

Goal

Develop an AI chatbot that:

- Mimics real-life conversations for immersive language practice.

- Is simple enough for new learners to use effectively.

- Secures approval for a beta launch.

Brainstorming

Exploring design directions

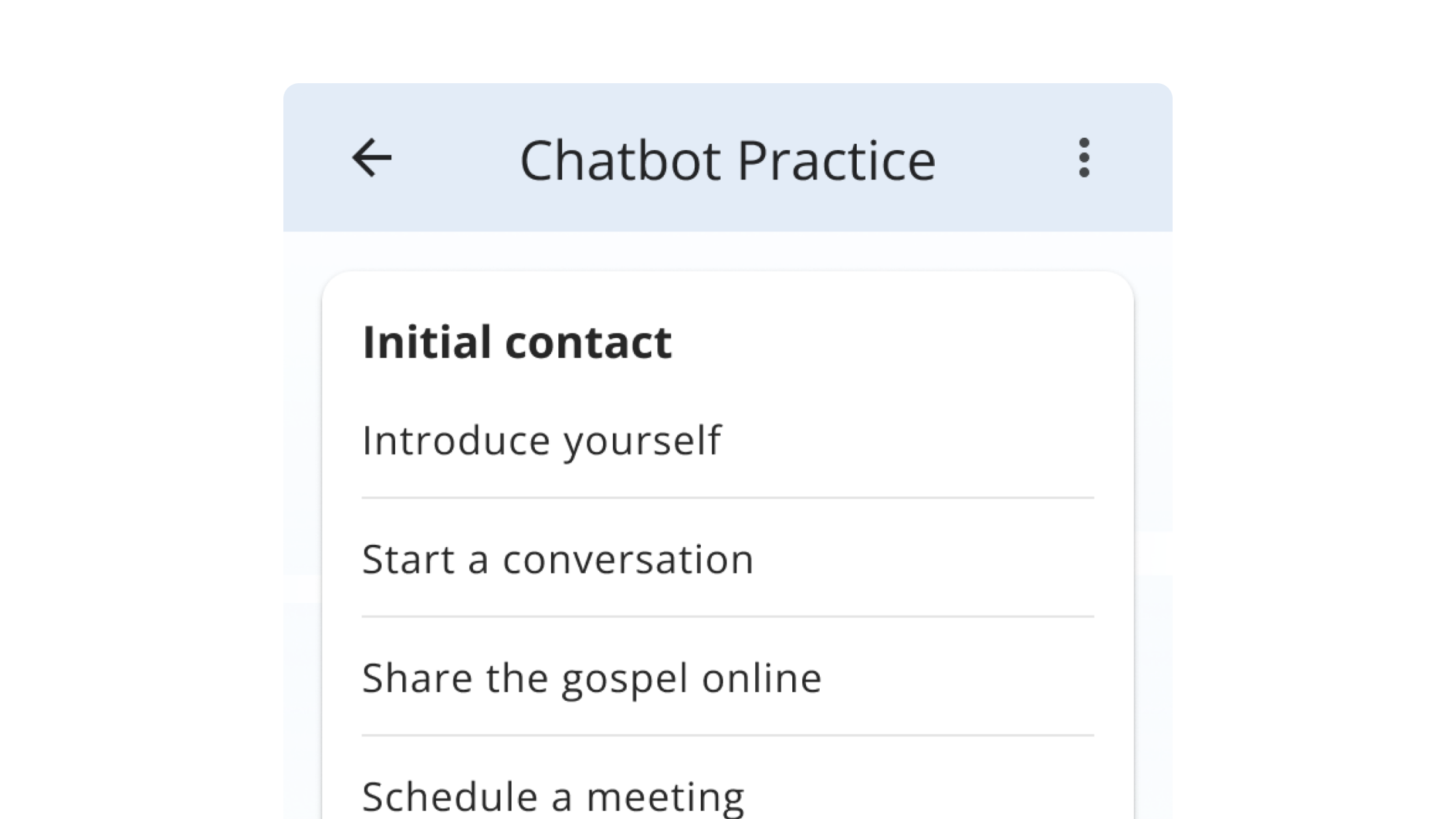

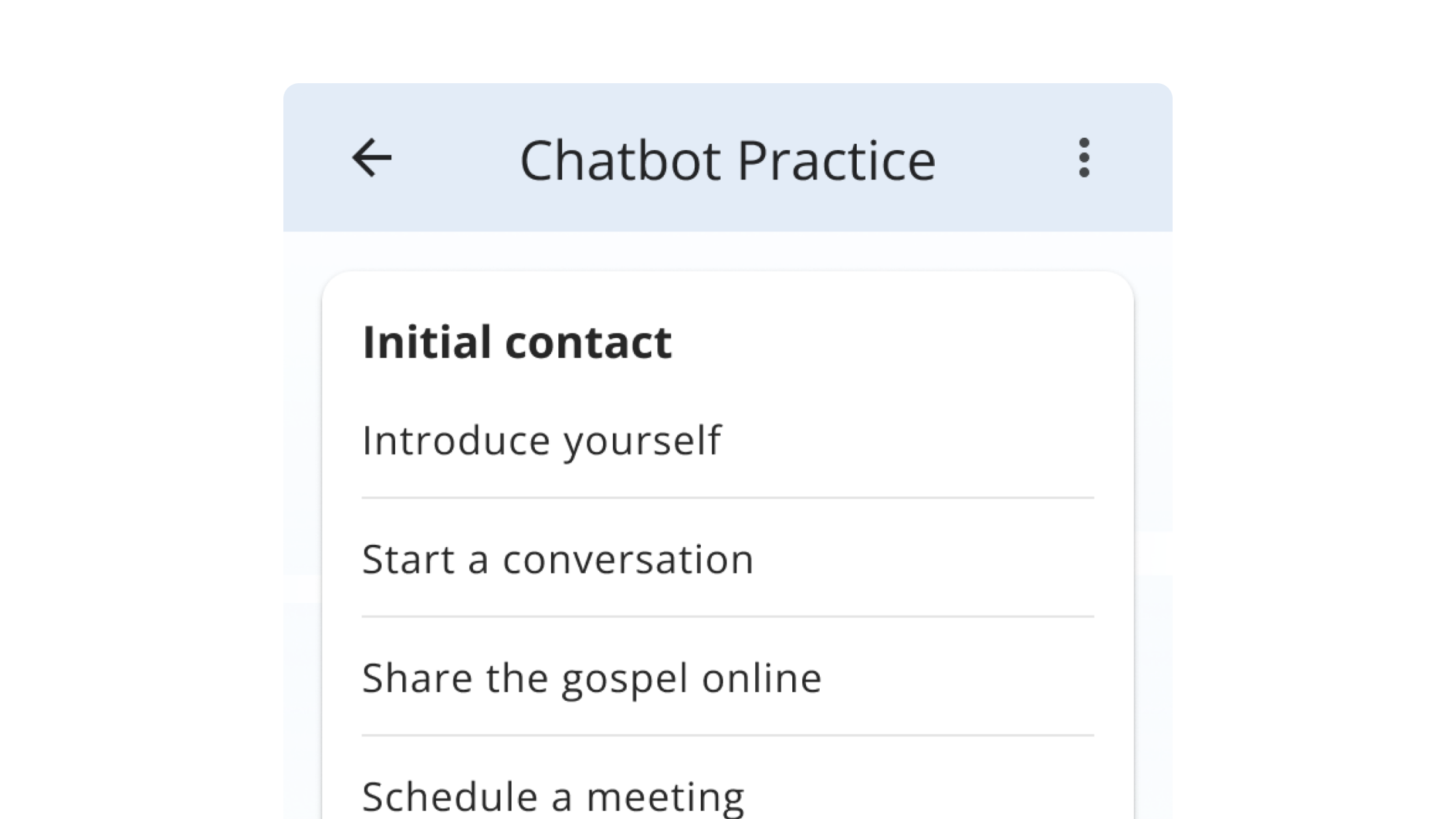

We explored two design directions for how missionaries could practice conversations: one focused on flexibility, the other on guided progression.

Chosen

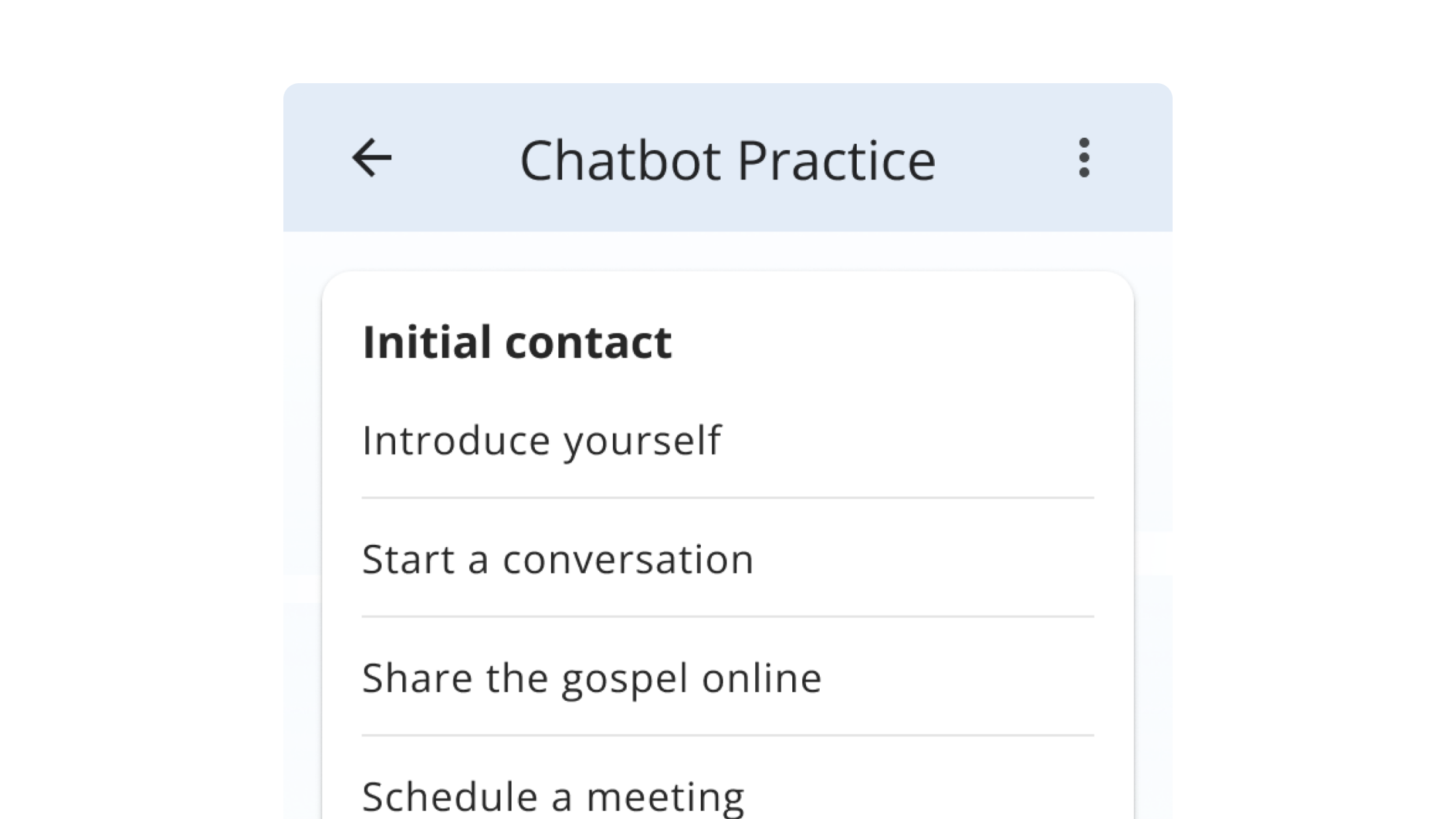

Scenario practice

Repeatable, mission-specific conversations

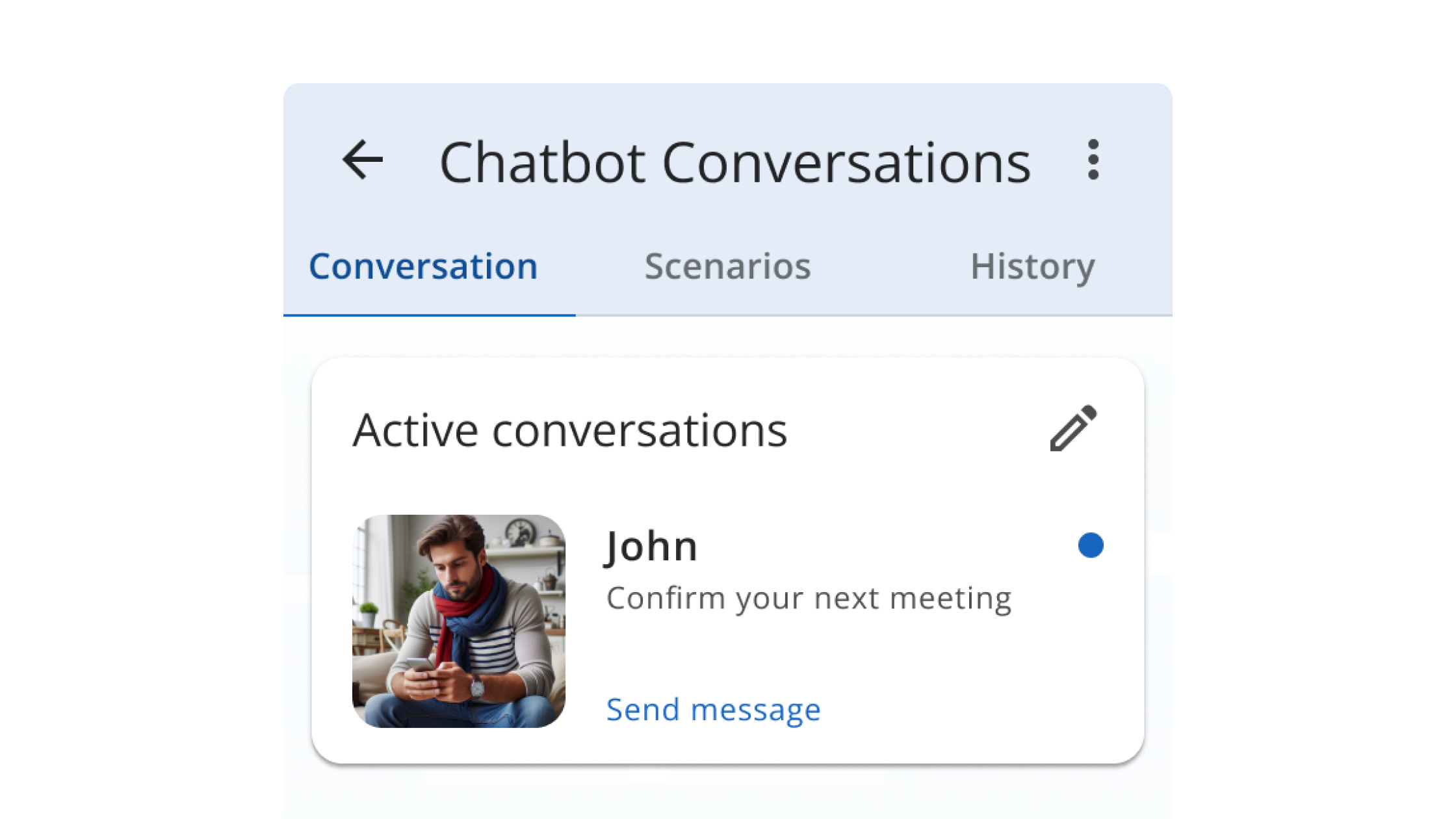

- Users practice key scenarios (ordering food, setting appointments, sharing scriptures).

- Each session is replayable with slight variations based on mission location.

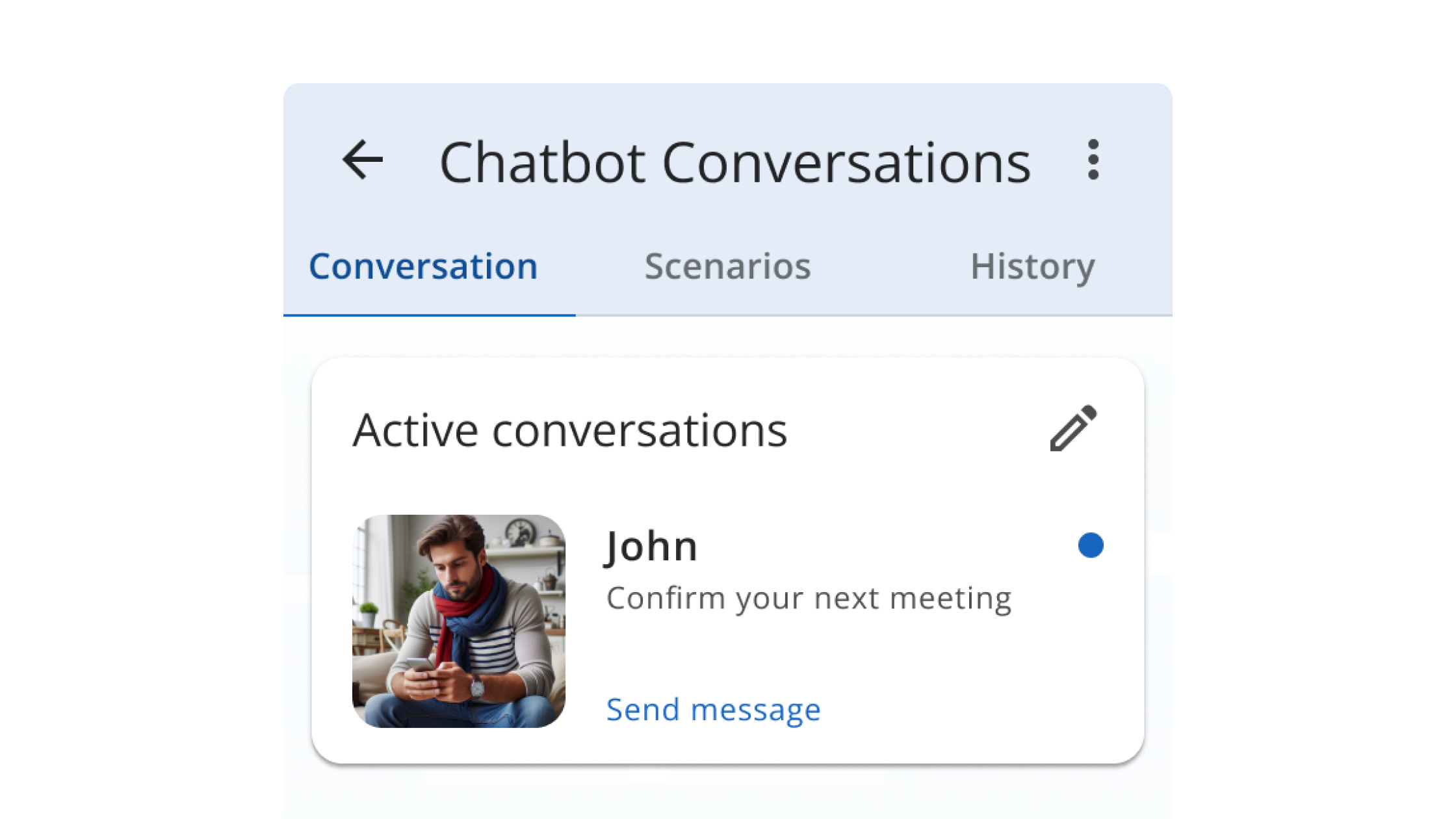

Character conversation

Story-driven, AI-guided dialogue

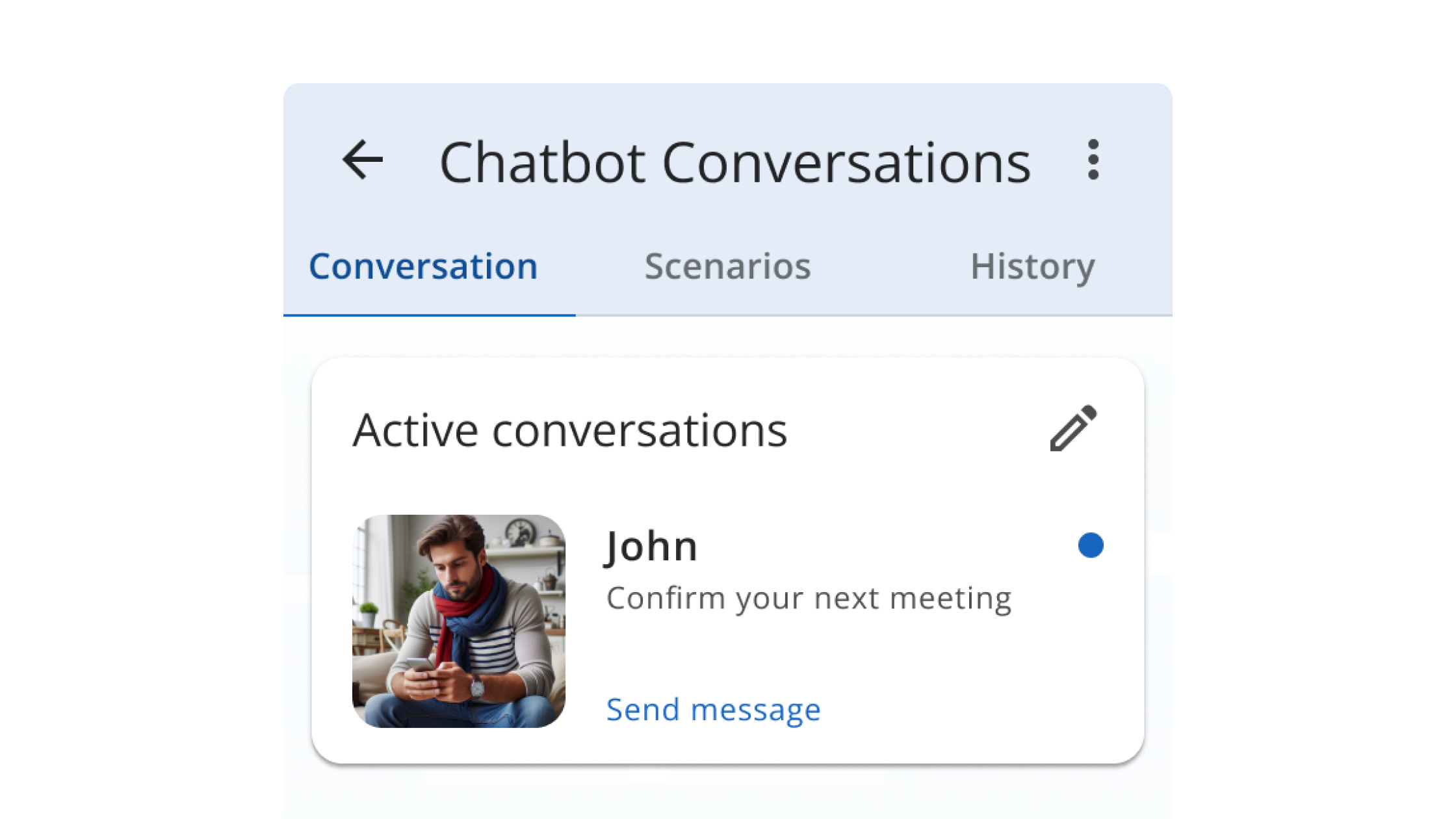

- AI guides missionaries through structured interactions with the same character (e.g., setting up meetings, giving lessons).

- Conversations follow a storyline that builds over time.

After reviewing these ideas with the team, we decided to focus on Scenario practice (Direction 1).

Why Scenario Practice?

More flexible

Missionaries could repeat scenarios until confident

Faster to launch

Easier to iterate and test as an MVP

Research

What kind of scenarios would be most helpful to missionaries?

To inform the content of the chatbot, I surveyed 50 missionaries about the types of conversations they found most useful. Their responses fell into two key categories:

Teaching

Setting up teaching appointments, sharing scriptures

Daily life

Ordering food, asking for directions, scheduling appointments

These two categories directly shaped the types of scenarios included in the first chatbot prototype.

Design

With clear priorities from research and feedback, I designed a scenario-based chatbot with three core features that made practice realistic, adaptive, and easy for beginners.

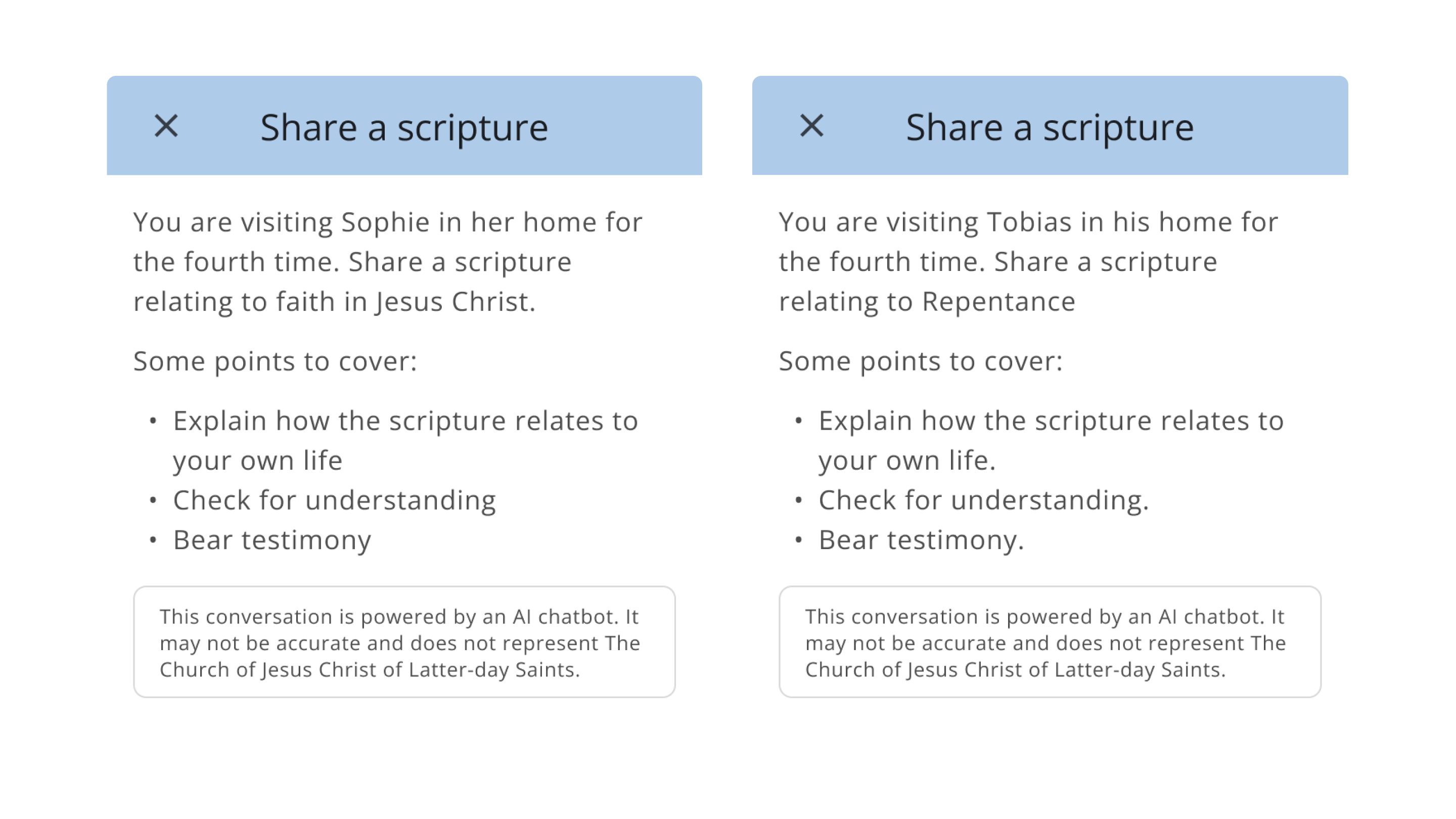

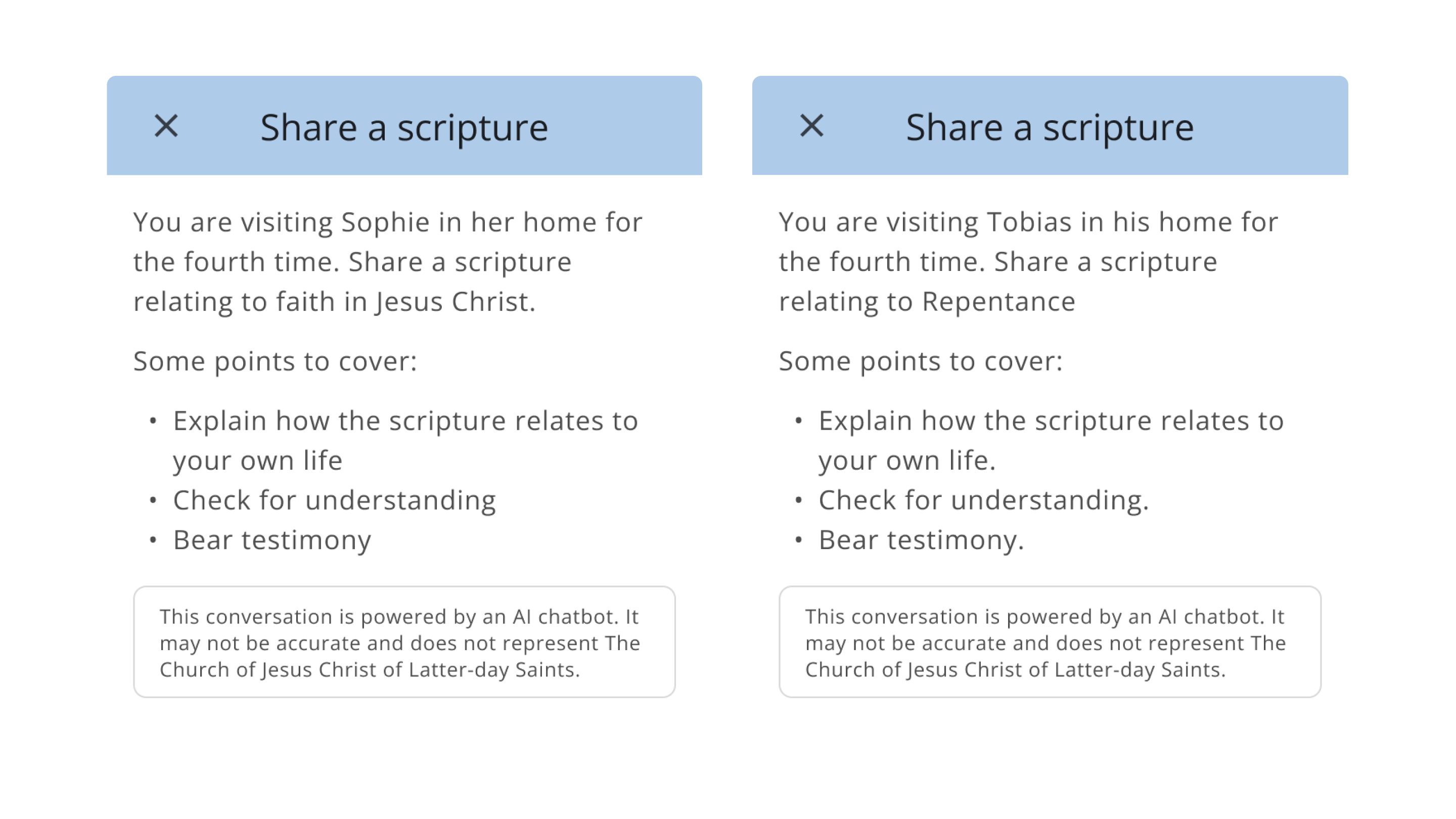

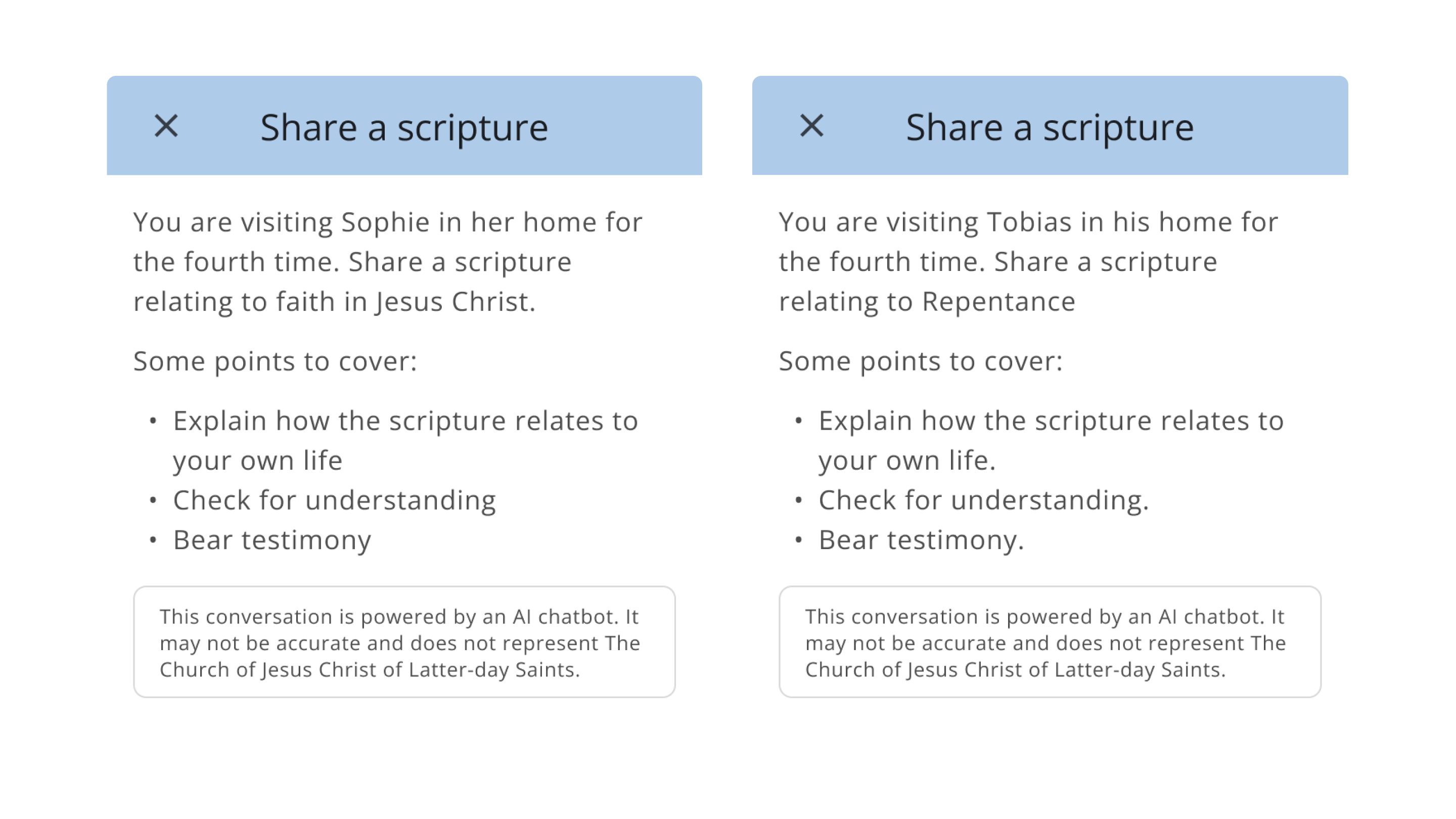

Replayable scenarios

Replayable scenarios addressed a core need: missionaries wanted to practice conversations multiple times until they felt fluent. Each scenario could be repeated with slight variations and was personalized by mission location, helping learners gain confidence through realistic, flexible practice.

Two versions of the same scenario.
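The case study doesn't say how replay variations and location personalization were produced. Assuming the chatbot is driven by a language model that receives a per-session prompt, one way to sketch the idea in Python is a scenario spec holding a goal, a few small variations, and a setting for each mission location, with each replay picking one variation at random. The `Scenario` class, the `build_prompt` function, and all example values are hypothetical.

```python
import random
from dataclasses import dataclass


@dataclass
class Scenario:
    """Hypothetical scenario spec; field names are illustrative, not Embark's schema."""
    title: str
    goal: str                   # what the learner should accomplish
    variations: list[str]       # small twists; one is picked per replay
    settings: dict[str, str]    # mission location -> local setting description


def build_prompt(scenario: Scenario, location: str, rng: random.Random) -> str:
    """Assemble a model prompt for one replay of a scenario at a given location."""
    setting = scenario.settings[location]    # personalize by mission location
    twist = rng.choice(scenario.variations)  # vary each replay slightly
    return (
        f"Role-play the scenario '{scenario.title}' set in {setting}. "
        f"The learner's goal: {scenario.goal}. "
        f"Variation for this run: {twist}."
    )


# Hypothetical example data for illustration only.
ordering_food = Scenario(
    title="Ordering food",
    goal="order a meal and ask for the bill",
    variations=[
        "the first dish the learner asks for is sold out",
        "the server recommends a local specialty",
    ],
    settings={
        "Brazil": "a small neighborhood lanchonete",
        "Japan": "a busy ramen shop",
    },
)

rng = random.Random(0)
first = build_prompt(ordering_food, "Brazil", rng)
second = build_prompt(ordering_food, "Brazil", rng)
print(first)
print(second)
```

Keeping variations and locations as data rather than code would let the team add new scenarios or missions without touching the prompt-building logic.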

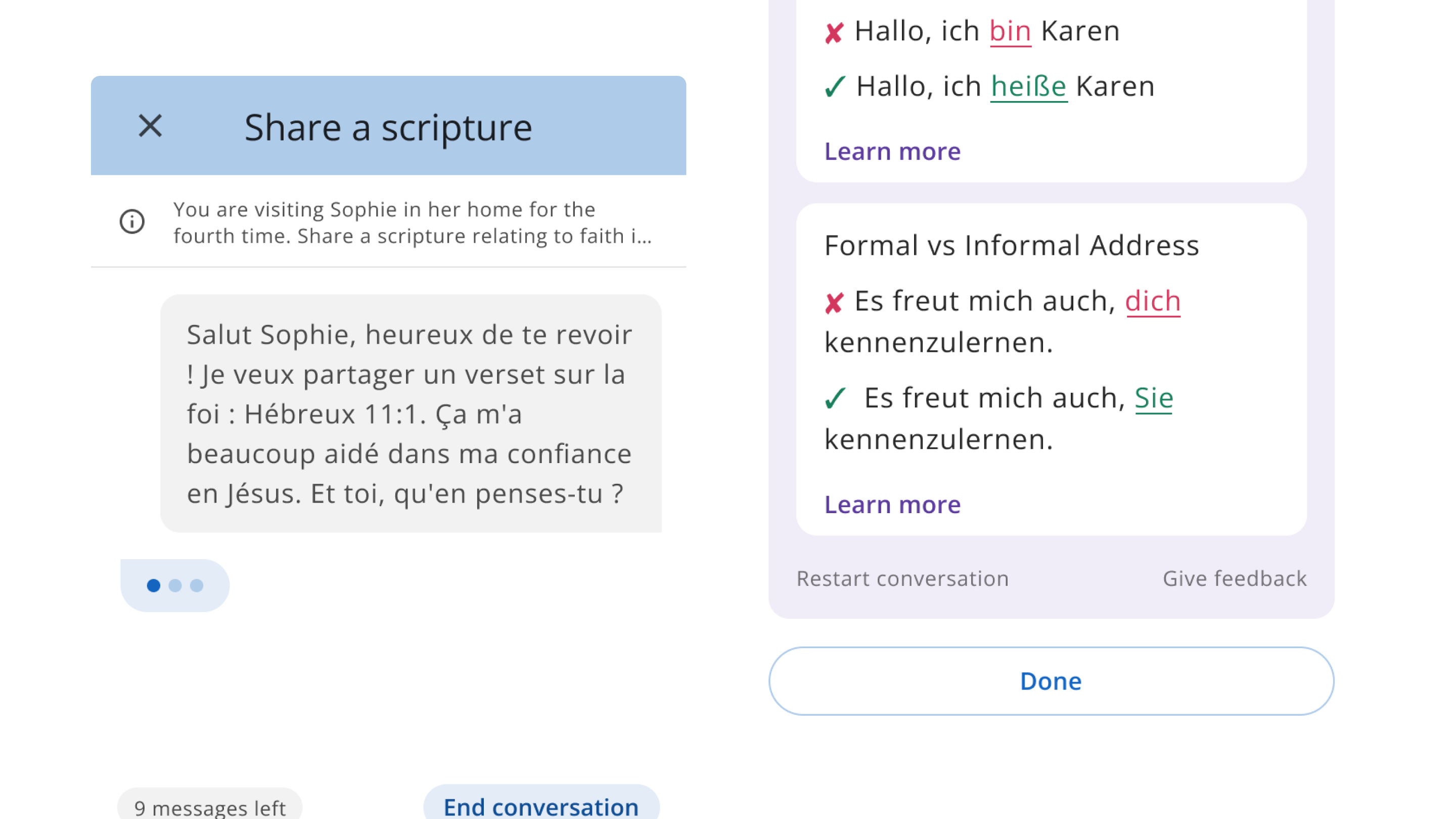

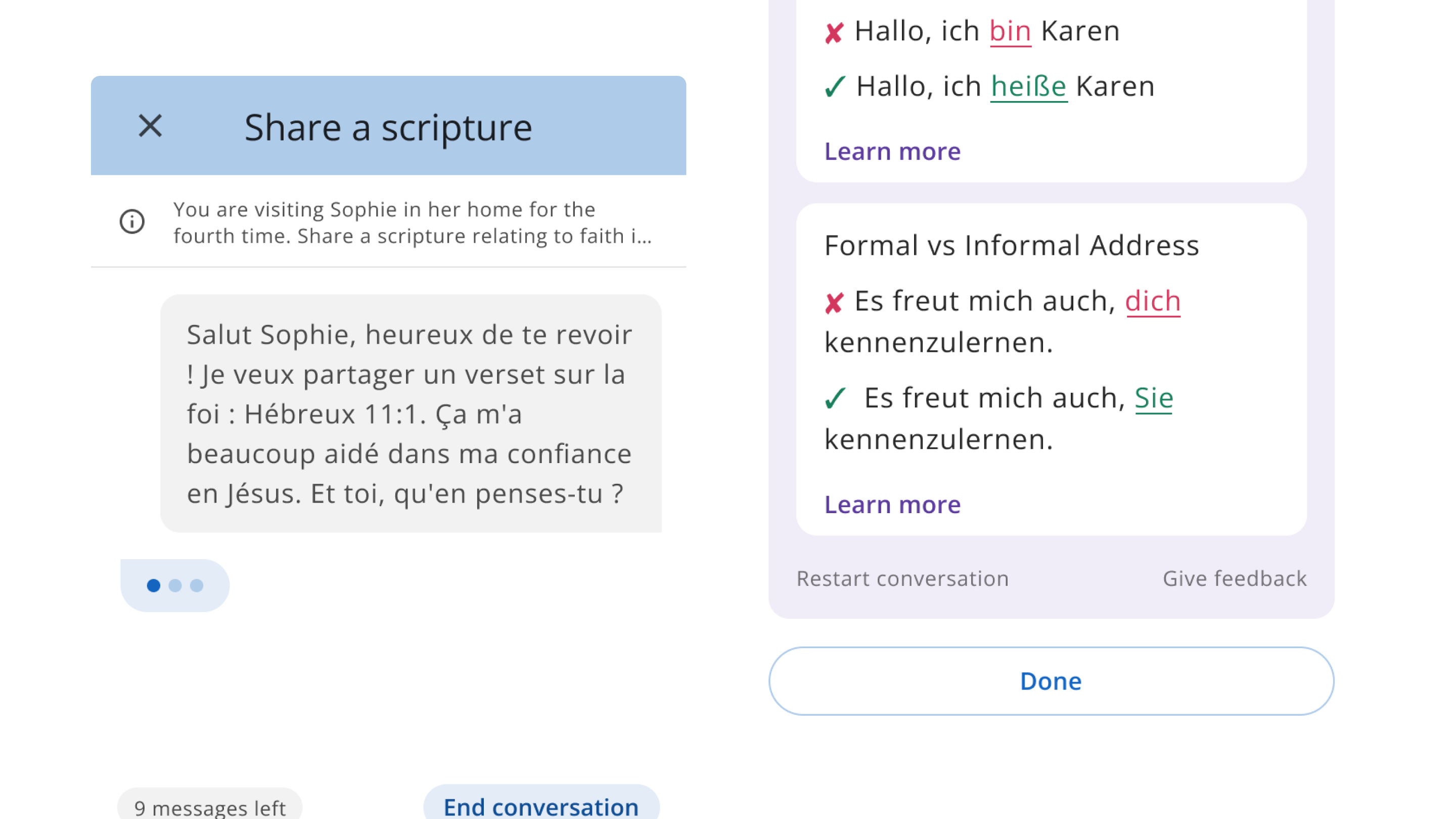

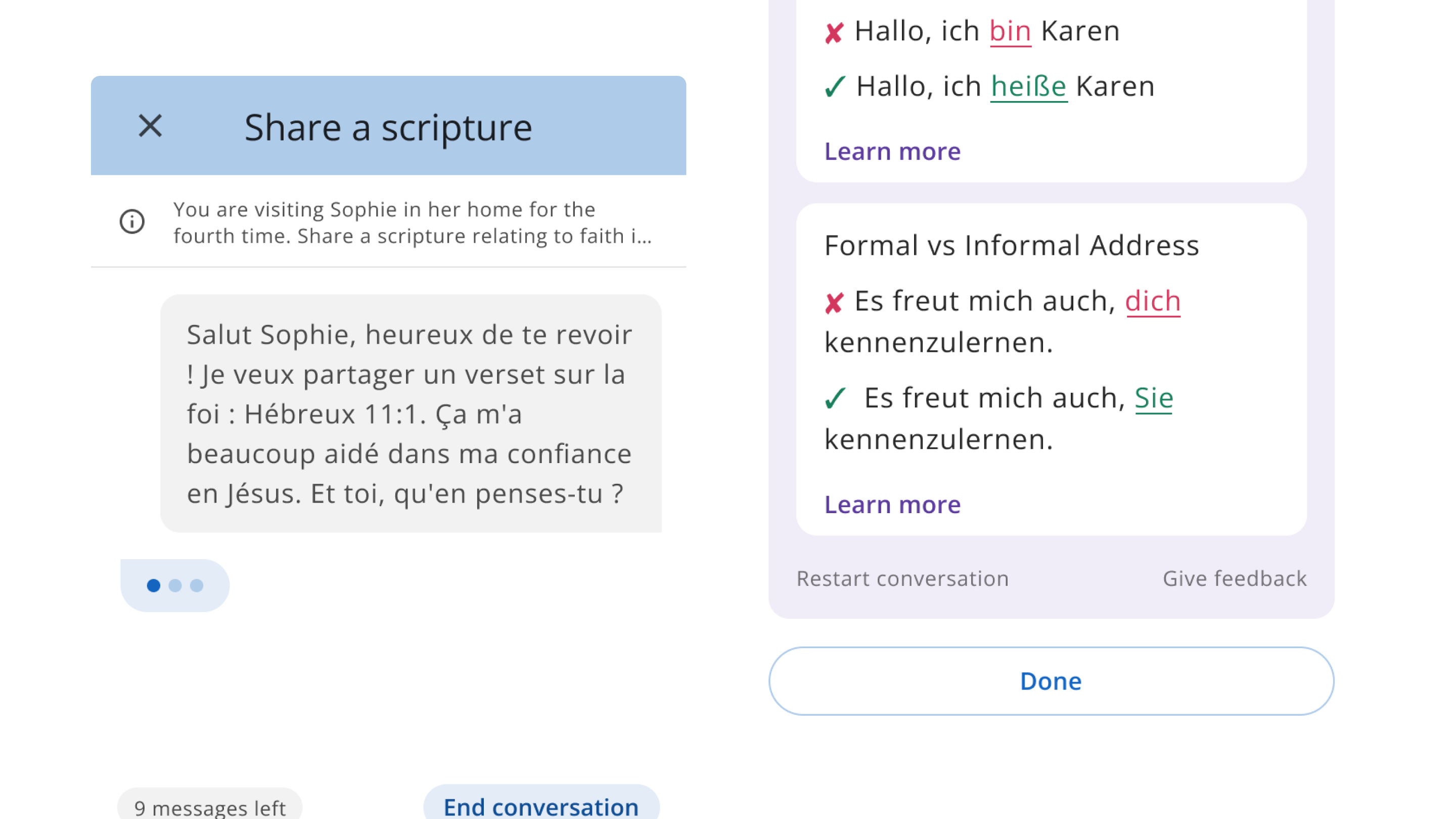

Instant feedback

Instant feedback helped users improve without a teacher present. The chatbot didn’t just correct mistakes—it explained why something was wrong, giving users the clarity and guidance they needed to adjust and progress in real time.

Feedback (left) and Learn more sheet (right)

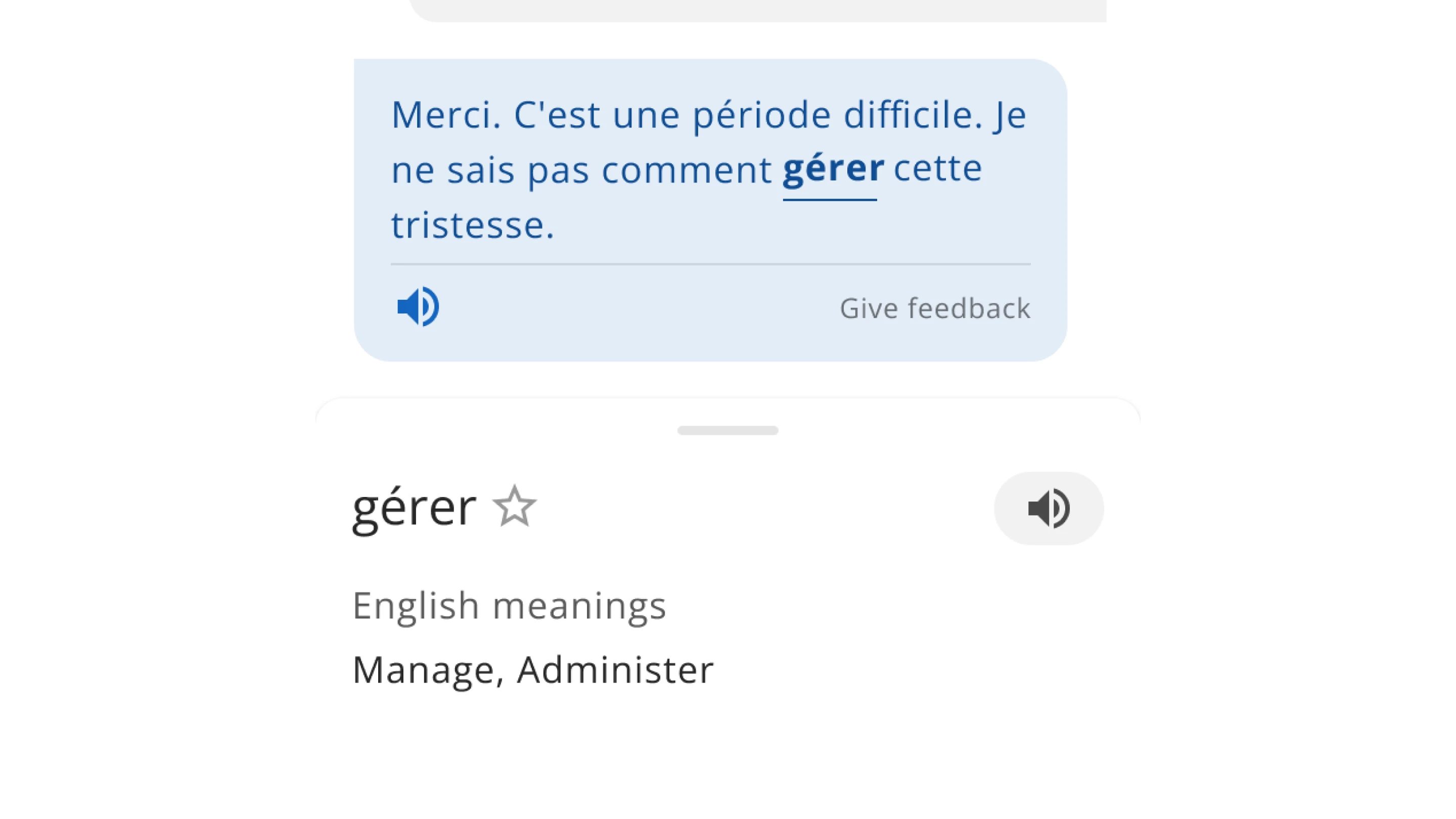

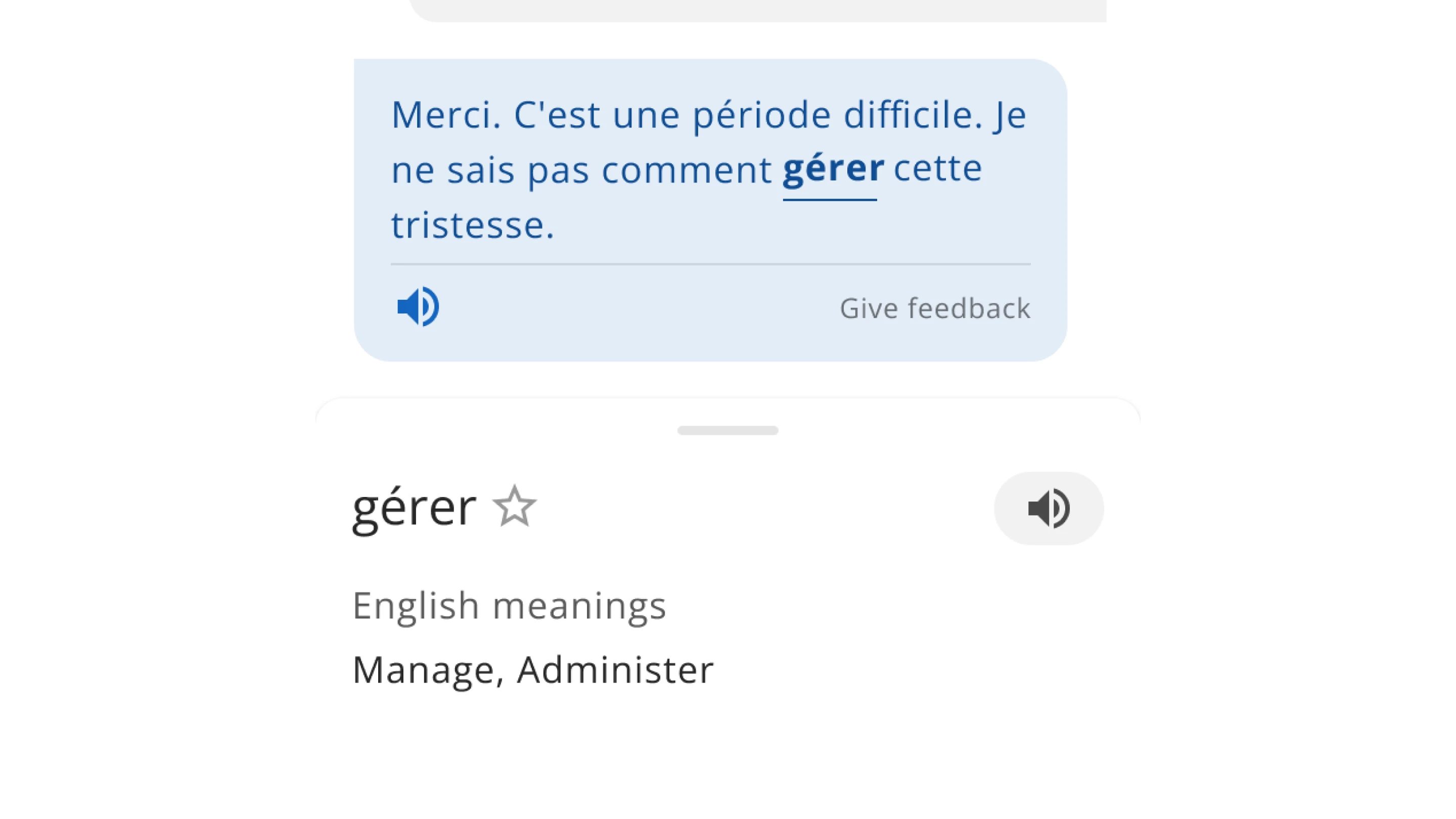

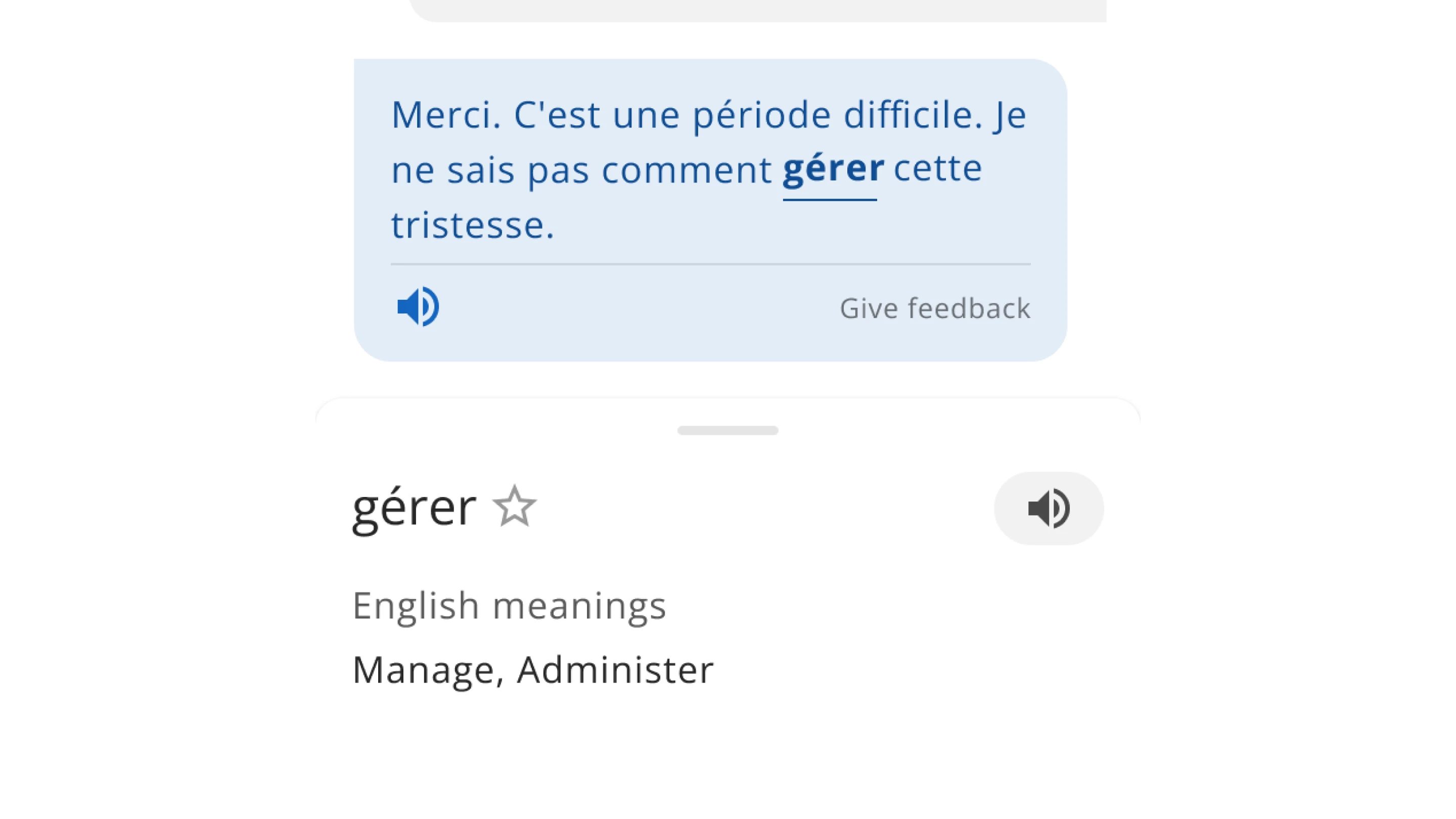

Tap for meaning

Tap for meaning supported learners when they got stuck on unfamiliar words. Missionaries could tap any word to see a translation and save it for later, keeping the conversation flowing without interruption.

Tap for meaning sheet.

Testing

To validate the design early, I tested a working prototype with 12 missionaries at the MTC. Our goal was to observe how new users interacted with the chatbot and identify any usability issues before moving forward.

Initial feedback

It was overwhelming for new missionaries

- The AI responses were too long and complex for beginners.

- 3 out of 12 users felt overwhelmed and quit mid-conversation.

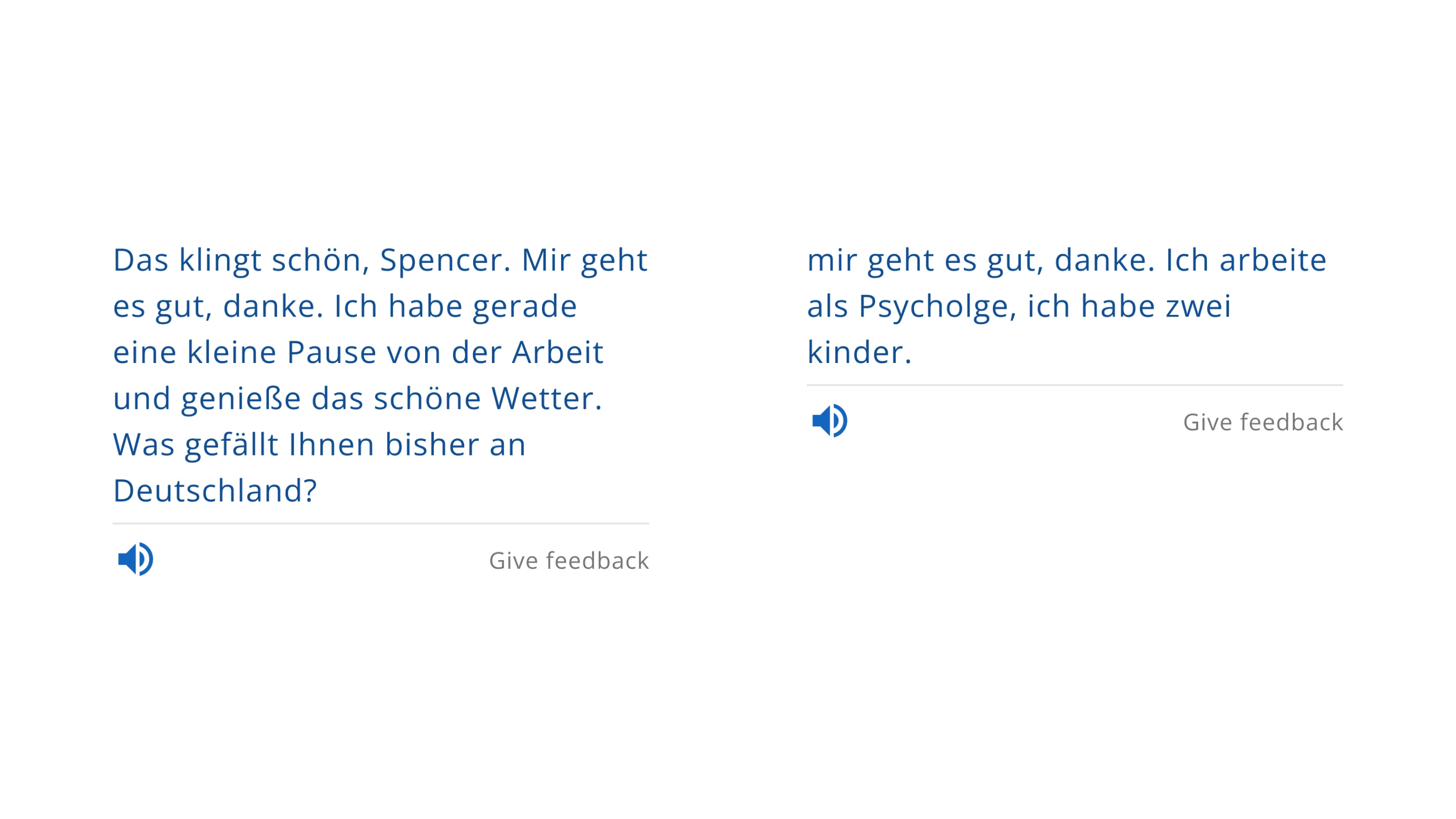

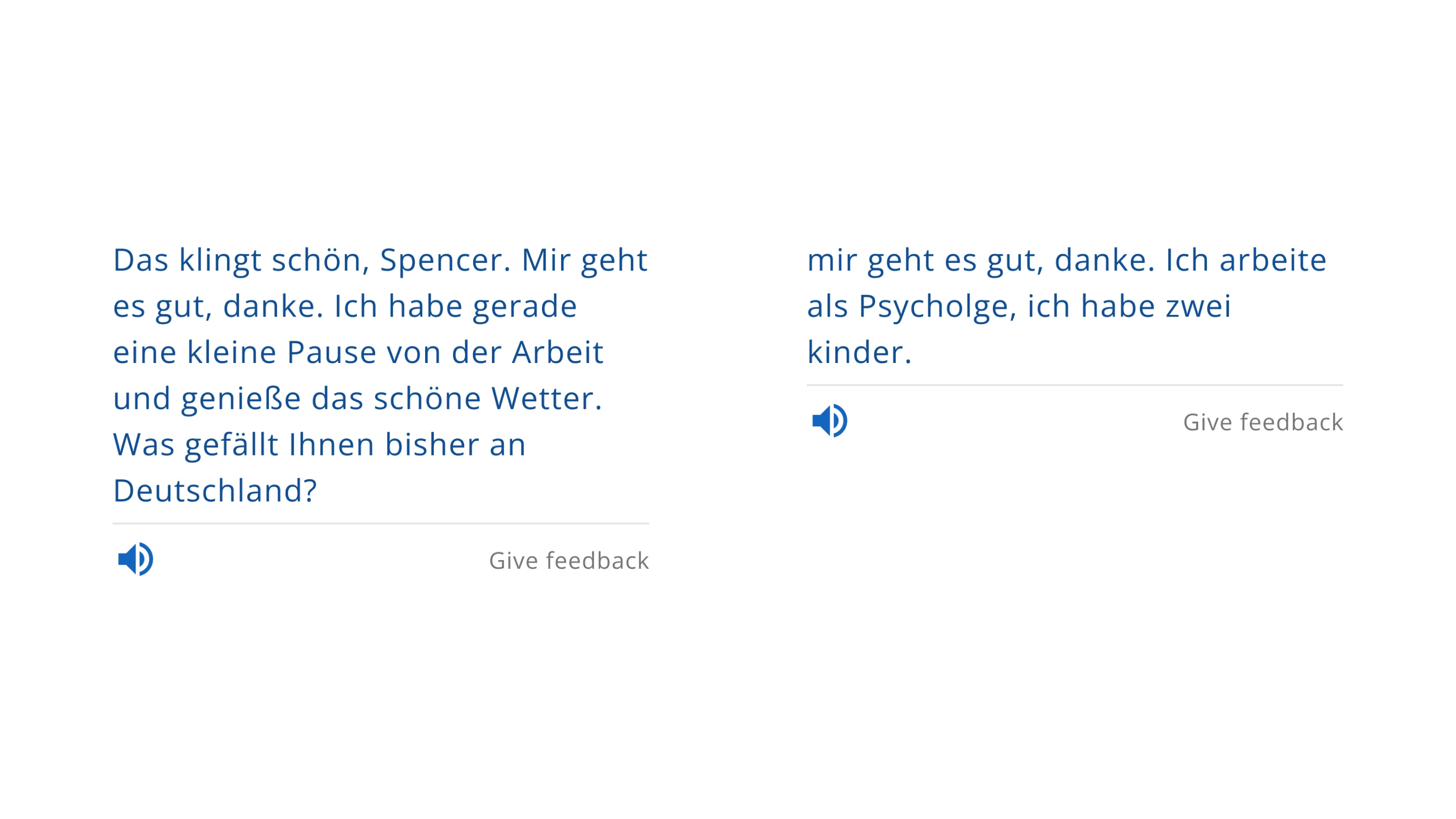

Improvements

Adding a proficiency feature

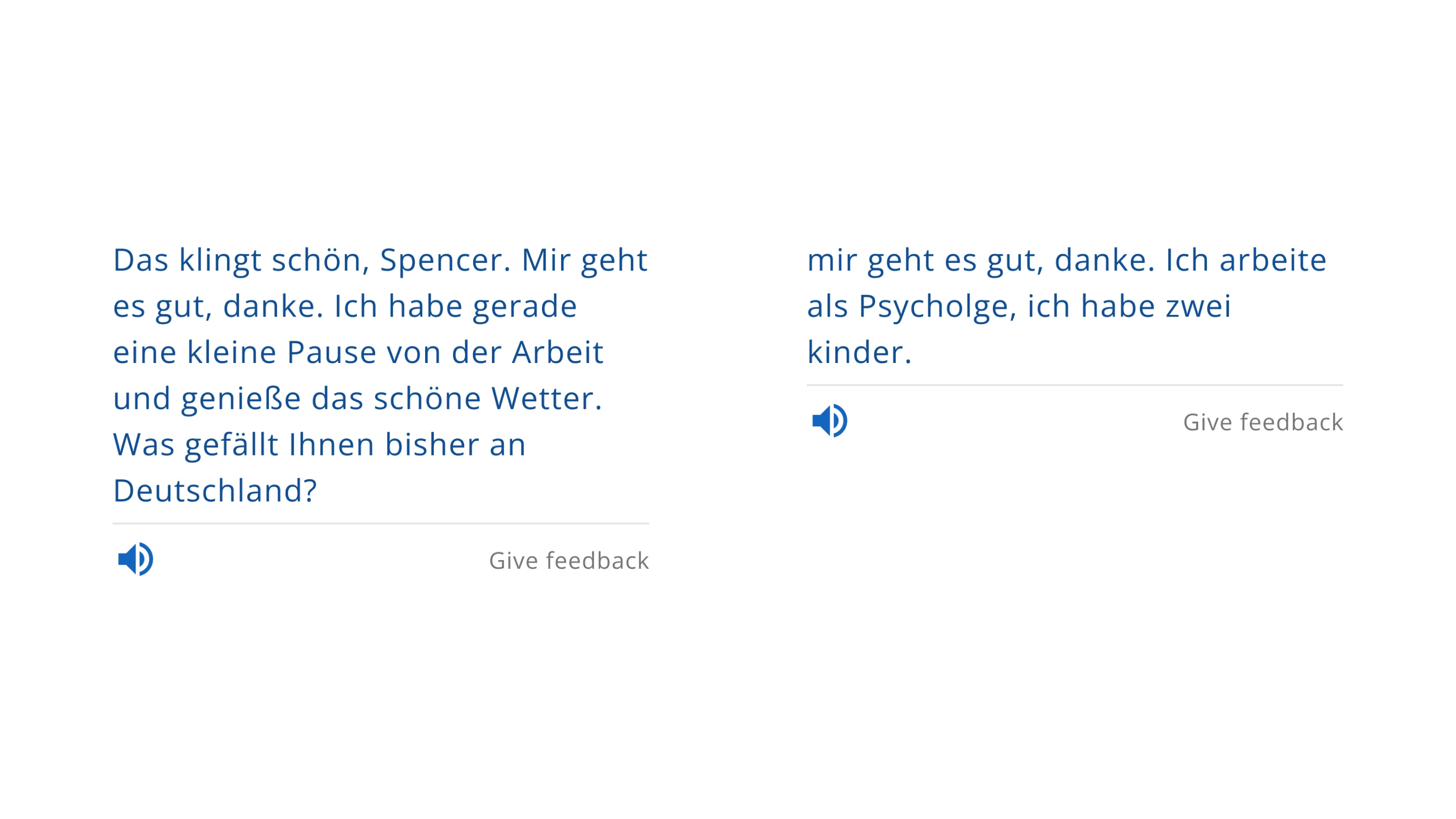

To address this, I collaborated with our machine learning engineer to add a “proficiency” feature. This allowed the chatbot to adjust its response complexity based on each user’s language level—making conversations more approachable and easier to complete.

Old default response (left) vs. updated beginner response (right)
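How the proficiency feature was built isn't described here. A minimal sketch, assuming the learner's level is passed to the model as an instruction and each reply is checked before it is shown, could map every level to limits on reply length and vocabulary. The `Proficiency` levels, the `LIMITS` values, and both functions are hypothetical, chosen only to illustrate shorter, simpler replies for beginners.

```python
import re
from dataclasses import dataclass
from enum import Enum


class Proficiency(Enum):
    BEGINNER = "beginner"
    INTERMEDIATE = "intermediate"
    ADVANCED = "advanced"


@dataclass(frozen=True)
class ResponseLimits:
    max_sentences: int
    max_words_per_sentence: int
    style: str


# Hypothetical limits, for illustration only.
LIMITS = {
    Proficiency.BEGINNER: ResponseLimits(2, 8, "use common words and the present tense"),
    Proficiency.INTERMEDIATE: ResponseLimits(3, 15, "use everyday vocabulary"),
    Proficiency.ADVANCED: ResponseLimits(5, 25, "speak naturally, including idioms"),
}


def style_instruction(level: Proficiency) -> str:
    """Instruction text added to the model prompt for the learner's level."""
    lim = LIMITS[level]
    return (
        f"Reply in at most {lim.max_sentences} sentences of at most "
        f"{lim.max_words_per_sentence} words each; {lim.style}."
    )


def within_limits(reply: str, level: Proficiency) -> bool:
    """Check a generated reply against the level's sentence and word limits."""
    lim = LIMITS[level]
    # Rough sentence split on ., ! and ?; good enough for a length check.
    sentences = [s for s in re.split(r"[.!?]+", reply) if s.strip()]
    if len(sentences) > lim.max_sentences:
        return False
    return all(len(s.split()) <= lim.max_words_per_sentence for s in sentences)
```

For example, `within_limits("Olá! Tudo bem?", Proficiency.BEGINNER)` returns `True`, while a three-sentence reply fails the beginner check and could be regenerated with a stricter instruction.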

Testing: Round 2

Proficiency levels improved completion rates by 33%

We ran a second test with 12 missionaries and saw a dramatic improvement:

- 100% completion rate, up from 75% (9 of 12) in the first round, a 33% relative improvement.

- Users found the chatbot easier to use and more effective.

User feedback

“This feature is great. I like that it doesn’t just say something is wrong, but explains why, so I know how to fix it.”

Missionary learning Portuguese

Launch & next steps

We presented the updated chatbot to stakeholders and received approval for a beta launch in select languages.

We're now expanding support to more mission language groups and exploring a voice-only mode, allowing missionaries to practice fully spoken conversations without needing a partner.
